The effectiveness of an AI system depends on the quality and structure of the data flowing through it. A well-designed AI data pipeline covers input preprocessing, processing workflows, and output formatting, with the aim of improving model performance while preserving data integrity and business relevance. Organizations with well-designed AI data pipelines report a 70% improvement in model accuracy and a 55% reduction in processing time compared to ad-hoc data handling approaches.

Modern AI applications require sophisticated data pipeline architectures that handle diverse data types, scale with growing volumes, and integrate seamlessly with existing business systems while maintaining security and compliance standards.

Understanding AI Data Pipeline Architecture

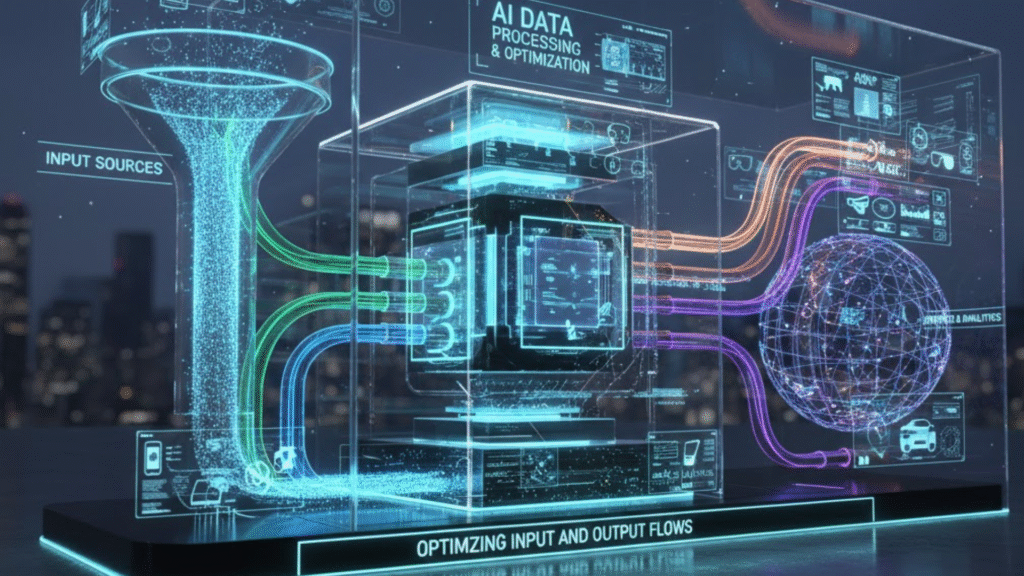

Effective AI data pipelines consist of interconnected stages that transform raw business data into AI-ready inputs, process information through appropriate models, and deliver formatted outputs that drive business decisions and automated actions.

Core Pipeline Components

- Data Ingestion: Collection from multiple sources including databases, APIs, files, and real-time streams

- Preprocessing and Cleaning: Standardization, validation, and transformation of raw data into AI-compatible formats

- Model Processing: Analysis by AI models chosen for the specific data type and objective

- Post-Processing: Result formatting, validation, and enhancement for business consumption

- Output Delivery: Integration with business systems, dashboards, and automated workflows
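The five components above can be sketched as a chain of small functions. This is a minimal illustration rather than a production design: the stage names, the in-memory source, and the keyword rule standing in for a model are all assumptions made for the example.

```python
from typing import Callable, Iterable, Iterator

Record = dict
Stage = Callable[[Iterable[Record]], Iterator[Record]]


def ingest(_: Iterable[Record]) -> Iterator[Record]:
    # Hypothetical in-memory source standing in for databases, APIs, or files.
    yield {"text": "  Great product!  "}
    yield {"text": ""}
    yield {"text": "Slow delivery"}


def preprocess(records: Iterable[Record]) -> Iterator[Record]:
    # Trim whitespace and drop empty inputs.
    for record in records:
        text = record["text"].strip()
        if text:
            yield {"text": text}


def model(records: Iterable[Record]) -> Iterator[Record]:
    # Placeholder keyword rule, not a real AI model.
    for record in records:
        label = "positive" if "great" in record["text"].lower() else "negative"
        yield {**record, "label": label}


def postprocess(records: Iterable[Record]) -> Iterator[Record]:
    # Shape results for business consumption.
    for record in records:
        yield {"summary": f"{record['label']}: {record['text']}"}


def run_pipeline(stages: list[Stage]) -> list[Record]:
    data: Iterable[Record] = ()
    for stage in stages:
        data = stage(data)
    return list(data)  # output delivery: here, simply a list


results = run_pipeline([ingest, preprocess, model, postprocess])
```

Because each stage only consumes and yields records, a stage can be replaced (for example, swapping the keyword rule for a real model call) without touching the others.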

Input Optimization Strategies

Data quality and format directly impact AI model performance, making input optimization critical for achieving reliable, accurate results that provide genuine business value.

Data Source Integration

- Unified Collection: Implement systems that aggregate data from CRM platforms, marketing tools, customer service systems, and external data sources into cohesive datasets

- Real-Time Processing: Enable streaming data integration for time-sensitive applications requiring immediate AI analysis and response

- Historical Data Integration: Combine current data with historical patterns to provide AI models with comprehensive context for improved accuracy
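As a rough sketch of unified collection, the function below merges rows from several sources into one record per customer. The source names, the `customer_id` join key, and the field prefixes are assumptions chosen for illustration.

```python
from collections import defaultdict


def unify(sources: dict[str, list[dict]], key: str = "customer_id") -> dict:
    """Merge rows from several sources into one record per key value."""
    unified: dict = defaultdict(dict)
    for source_name, rows in sources.items():
        for row in rows:
            if key not in row:
                continue  # rows without the join key cannot be linked
            for field, value in row.items():
                if field != key:
                    # Prefix with the source name so fields never collide.
                    unified[row[key]][f"{source_name}.{field}"] = value
    return dict(unified)


# Hypothetical sample data from two systems.
sources = {
    "crm": [{"customer_id": 1, "name": "Ada"}],
    "support": [{"customer_id": 1, "tickets": 3}, {"tickets": 9}],
}
customers = unify(sources)
```

Prefixing each field with its source keeps provenance visible, which helps when two systems disagree about the same attribute.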

Preprocessing Excellence

- Data Standardization: Ensure consistent formatting, units, and structures across diverse data sources before AI processing

- Quality Validation: Implement automated checks for data completeness, accuracy, and consistency that filter problematic inputs

- Context Enrichment: Enhance raw data with additional metadata, relationships, and business context that improves AI model understanding
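The three preprocessing steps above might look like the following sketch. The required fields, the order record shape, and the large-order threshold are hypothetical; real pipelines would take these from their own schema and business rules.

```python
REQUIRED_FIELDS = ("customer_id", "amount", "currency")
LARGE_ORDER_THRESHOLD = 1000.0  # hypothetical business rule


def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record is usable."""
    problems = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or str(value).strip() == "":
            problems.append(f"missing {field}")
    try:
        if float(record.get("amount", 0)) < 0:
            problems.append("negative amount")
    except (TypeError, ValueError):
        problems.append("amount is not numeric")
    return problems


def standardize(record: dict) -> dict:
    """Normalize types, whitespace, and casing."""
    return {
        "customer_id": str(record["customer_id"]).strip(),
        "amount": round(float(record["amount"]), 2),
        "currency": str(record["currency"]).strip().upper(),
    }


def enrich(record: dict) -> dict:
    """Attach business context derived from the cleaned values."""
    return {**record, "is_large_order": record["amount"] >= LARGE_ORDER_THRESHOLD}


def prepare(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    clean, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            clean.append(enrich(standardize(record)))
    return clean, rejected


raw = [
    {"customer_id": " 42 ", "amount": "19.999", "currency": "usd"},
    {"customer_id": "7", "amount": "abc", "currency": "EUR"},
]
clean, rejected = prepare(raw)
```

Keeping rejected records together with their reasons, instead of silently dropping them, makes data quality problems visible to whoever owns the source system.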

Format Optimization

- Model-Specific Formatting: Tailor data presentation to optimize performance for specific AI models and processing requirements

- Batch vs. Stream Processing: Choose appropriate processing methods based on latency requirements and data volume characteristics

- Resource Efficiency: Optimize data formats and compression to minimize processing time and computational costs
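One common middle ground between batch and stream processing is micro-batching: grouping a stream into small fixed-size batches. The sketch below combines that with gzip-compressed JSON as one possible compact transfer format. The batch size and the choice of JSON plus gzip are illustrative assumptions, not recommendations from the text above.

```python
import gzip
import json
from itertools import islice


def micro_batches(stream, size: int):
    """Group any iterable into lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(stream)
    while batch := list(islice(iterator, size)):
        yield batch


def pack(batch: list) -> bytes:
    """Serialize a batch to compressed JSON bytes."""
    return gzip.compress(json.dumps(batch).encode("utf-8"))


def unpack(payload: bytes) -> list:
    """Reverse of pack()."""
    return json.loads(gzip.decompress(payload).decode("utf-8"))


events = ({"id": i} for i in range(5))  # stand-in for a real event stream
batches = list(micro_batches(events, size=2))
restored = unpack(pack(batches[0]))
```

Smaller batches lower latency, while larger ones amortize per-call overhead and usually compress better, so the size is a tuning parameter.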

Processing Workflow Optimization

Intelligent processing workflows route different data types through appropriate AI models while managing resource allocation, processing priorities, and error handling for reliable system performance.

Model Selection Logic

- Data Type Routing: Automatically direct text, image, audio, and video data through specialized AI models optimized for each content type

- Complexity Assessment: Route simple vs. complex processing tasks through appropriate models to balance accuracy with efficiency

- Performance Monitoring: Continuously evaluate model performance and automatically adjust routing based on accuracy and speed metrics
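Data type routing and complexity assessment can be sketched as a lookup table plus a simple size check. The model names and the 200-character cutoff are placeholders, and the sketch does not cover metric-driven routing adjustments.

```python
COMPLEXITY_THRESHOLD = 200  # characters; hypothetical cutoff


def pick_text_model(payload: str) -> str:
    # Short inputs go to a cheaper model, long ones to a more capable one.
    if len(payload) <= COMPLEXITY_THRESHOLD:
        return "small-text-model"
    return "large-text-model"


# Maps a data type to a function that chooses a model name for the payload.
ROUTES = {
    "text": pick_text_model,
    "image": lambda payload: "image-model",
    "audio": lambda payload: "speech-model",
    "video": lambda payload: "video-model",
}


def route(item: dict) -> str:
    try:
        chooser = ROUTES[item["type"]]
    except KeyError:
        raise ValueError(f"no route for data type {item.get('type')!r}") from None
    return chooser(item["payload"])


short_choice = route({"type": "text", "payload": "Hello"})
long_choice = route({"type": "text", "payload": "x" * 500})
image_choice = route({"type": "image", "payload": b"\x89PNG"})
```

Failing loudly on an unknown data type is a deliberate choice here: silently falling back to a default model can hide ingestion errors.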

Parallel Processing Architecture

- Concurrent Operations: Process independent data streams simultaneously to maximize throughput and reduce overall processing time

- Resource Load Balancing: Distribute processing load across available computational resources for optimal system utilization

- Priority Queue Management: Implement intelligent queuing that prioritizes high-importance data processing based on business rules
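A minimal sketch of priority queuing and concurrent processing, using only the Python standard library. The job names, priority values, and the `process` stand-in are invented for the example; a lower number means higher priority.

```python
import heapq
import itertools
from concurrent.futures import ThreadPoolExecutor


class PriorityJobQueue:
    """Pops the lowest priority number first; ties keep insertion order."""

    def __init__(self) -> None:
        self._heap: list = []
        self._counter = itertools.count()

    def push(self, job: str, priority: int) -> None:
        # The counter breaks ties so jobs themselves are never compared.
        heapq.heappush(self._heap, (priority, next(self._counter), job))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


def process(job: str) -> str:
    return job.upper()  # stand-in for a real model call


queue = PriorityJobQueue()
queue.push("nightly report", priority=5)
queue.push("fraud alert", priority=0)
queue.push("newsletter stats", priority=5)

ordered = [queue.pop() for _ in range(len(queue))]

# Independent jobs run concurrently; map() returns results in input order.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(process, ordered))
```

Threads suit I/O-bound work such as calls to a remote model API. CPU-bound processing in Python would more likely use processes or a distributed worker system.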

Output Flow Optimization

Processed AI results must be formatted and delivered in ways that integrate seamlessly with business workflows, decision-making processes, and automated systems.

Result Formatting

- Business-Ready Outputs: Transform AI model results into formats that business users can immediately understand and act upon

- Structured Data Delivery: Provide consistent output schemas that integrate reliably with downstream business systems

- Confidence Scoring: Include accuracy indicators and confidence levels that help users understand result reliability
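A consistent output schema with a confidence score could be sketched as follows. The field names and the 0.7 review threshold are assumptions, and the per-label scores are presumed to come from whatever model the pipeline uses.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Prediction:
    record_id: str
    label: str
    confidence: float
    needs_review: bool


def format_result(record_id: str, scores: dict[str, float],
                  review_below: float = 0.7) -> Prediction:
    """Turn raw per-label scores into a fixed, business-readable schema."""
    label, confidence = max(scores.items(), key=lambda pair: pair[1])
    return Prediction(
        record_id=record_id,
        label=label,
        confidence=round(confidence, 3),
        needs_review=confidence < review_below,  # flag uncertain results
    )


confident = format_result("r1", {"spam": 0.9, "ham": 0.1})
uncertain = format_result("r2", {"spam": 0.55, "ham": 0.45})
payload = json.dumps(asdict(confident))  # ready for a downstream system
```

The `needs_review` flag gives business users a direct cue about reliability without requiring them to interpret the raw score.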

Integration and Delivery

- API Endpoints: Provide standardized interfaces that enable easy integration with existing business applications and workflows

- Real-Time Notifications: Trigger immediate alerts and actions based on AI analysis results that meet predefined criteria

- Batch Report Generation: Compile AI insights into comprehensive reports for strategic decision-making and performance analysis
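Criteria-based notifications and batch reporting can both be driven from the same result records. In this sketch the rule names, thresholds, and result fields are hypothetical, and actual delivery (email, webhook, dashboard) is left out.

```python
from collections import Counter

# Each rule pairs an alert name with a predicate over one result.
ALERT_RULES = [
    ("high_churn_risk", lambda r: r["label"] == "churn" and r["confidence"] >= 0.8),
    ("low_confidence", lambda r: r["confidence"] < 0.5),
]


def triggered_alerts(result: dict) -> list[str]:
    """Names of all rules that the result satisfies."""
    return [name for name, matches in ALERT_RULES if matches(result)]


def build_report(results: list[dict]) -> dict:
    """Aggregate a batch of results into summary figures."""
    labels = Counter(r["label"] for r in results)
    alerts = Counter(a for r in results for a in triggered_alerts(r))
    return {"total": len(results), "by_label": dict(labels), "alerts": dict(alerts)}


results = [
    {"label": "churn", "confidence": 0.92},
    {"label": "retain", "confidence": 0.40},
    {"label": "retain", "confidence": 0.85},
]
report = build_report(results)
```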

Scalability and Performance Considerations

Production AI data pipelines must handle increasing data volumes while maintaining processing speed, accuracy, and cost efficiency as organizations grow and requirements evolve.

Horizontal Scaling

- Distributed Processing: Implement architectures that can add processing capacity by distributing workload across multiple systems

- Auto-Scaling Logic: Automatically adjust computational resources based on data volume and processing demand patterns

- Cost Optimization: Balance processing speed with computational costs through intelligent resource allocation strategies
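Auto-scaling logic often reduces to a small calculation: how many workers does the current backlog need, within a cost ceiling? The function below is one simple way to express that. The parameter names and default limits are assumptions for illustration.

```python
import math


def desired_workers(queue_depth: int, per_worker_capacity: int,
                    min_workers: int = 1, max_workers: int = 10) -> int:
    """Workers needed for the backlog, clamped to [min_workers, max_workers]."""
    if per_worker_capacity < 1:
        raise ValueError("per_worker_capacity must be at least 1")
    needed = math.ceil(queue_depth / per_worker_capacity)
    # max_workers caps cost; min_workers keeps the pipeline responsive.
    return max(min_workers, min(max_workers, needed))


idle = desired_workers(queue_depth=0, per_worker_capacity=100)
busy = desired_workers(queue_depth=250, per_worker_capacity=100)
capped = desired_workers(queue_depth=5000, per_worker_capacity=100)
```

The upper bound is where cost optimization enters: raising it buys speed under load, lowering it trades latency for a predictable bill.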

Performance Monitoring

- Pipeline Metrics: Track data throughput, processing latency, error rates, and resource utilization across all pipeline stages

- Quality Assurance: Monitor output quality and accuracy to identify degradation that requires pipeline optimization

- Bottleneck Identification: Systematically identify and resolve processing constraints that limit overall pipeline performance
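Per-stage metrics can be collected with a small context manager wrapped around each stage. The class below is a sketch with invented names; a production system would more likely export such figures to a dedicated monitoring tool.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageMetrics:
    """Tracks call counts, error counts, and total time per stage."""

    def __init__(self) -> None:
        self.calls = defaultdict(int)
        self.errors = defaultdict(int)
        self.seconds = defaultdict(float)

    @contextmanager
    def track(self, stage: str):
        start = time.perf_counter()
        try:
            yield
        except Exception:
            self.errors[stage] += 1
            raise  # metrics must not swallow failures
        finally:
            self.calls[stage] += 1
            self.seconds[stage] += time.perf_counter() - start

    def error_rate(self, stage: str) -> float:
        calls = self.calls[stage]
        return self.errors[stage] / calls if calls else 0.0

    def slowest_stage(self):
        # The stage with the most accumulated time is a bottleneck candidate.
        return max(self.seconds, key=self.seconds.get, default=None)


metrics = StageMetrics()
for value in ["1", "2", "oops"]:
    try:
        with metrics.track("parse"):
            int(value)
    except ValueError:
        pass  # the failure is already counted in metrics.errors
```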

Security and Compliance in Data Pipelines

Enterprise AI data pipelines require robust security measures that protect sensitive business data throughout the entire processing workflow while maintaining compliance with industry regulations.

Data Protection

- Encryption Standards: Implement end-to-end encryption for data transmission and storage throughout the pipeline

- Access Controls: Maintain role-based permissions that limit data access to authorized personnel and systems

- Audit Trails: Generate comprehensive logs of data processing activities for compliance reporting and security analysis
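Role-based access control and audit logging can be combined so that every access decision leaves a record. The roles, permissions, and log fields below are hypothetical, and the sketch does not cover encryption, which would normally rely on a vetted cryptography library and TLS.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": frozenset({"read"}),
    "engineer": frozenset({"read", "write"}),
}

audit_log: list[dict] = []


def authorize(user: str, role: str, action: str, resource: str) -> bool:
    """Check a permission and record the decision, allowed or not."""
    allowed = action in ROLE_PERMISSIONS.get(role, frozenset())
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed


can_read = authorize("ada", "analyst", "read", "orders")
can_write = authorize("ada", "analyst", "write", "orders")
```

Logging denied attempts as well as granted ones matters for security analysis, since repeated denials are often the first sign of misuse.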

Qolaba AI: Model-Agnostic Pipeline Excellence

Qolaba AI’s access to 60+ specialized AI models across text, image, video, and speech processing supports pipeline designs that route each data type through the model best suited to its processing requirements. The platform’s model-agnostic approach lets pipelines adopt newer AI capabilities without being locked into a single tool, while API integration enables connection with existing data sources and business systems.

The credit-based pricing model scales naturally with data processing volumes, making it cost-effective for organizations with varying pipeline demands, while enterprise-ready security features ensure data protection throughout the entire processing workflow.

Best Practices for Pipeline Implementation

Successful AI data pipeline implementation requires systematic planning that balances performance requirements with resource constraints while building capabilities that evolve with organizational needs.

Effective organizations start with pilot implementations that demonstrate value before scaling to production volumes. They also implement comprehensive monitoring and alerting, and maintain pipeline documentation that supports collaboration and troubleshooting across technical and business stakeholders.

Ready to optimize your AI data pipelines with access to 60+ specialized models? Explore Qolaba’s comprehensive platform and build efficient data processing workflows that scale with your business while maintaining security and performance standards.